Architecture

Overview

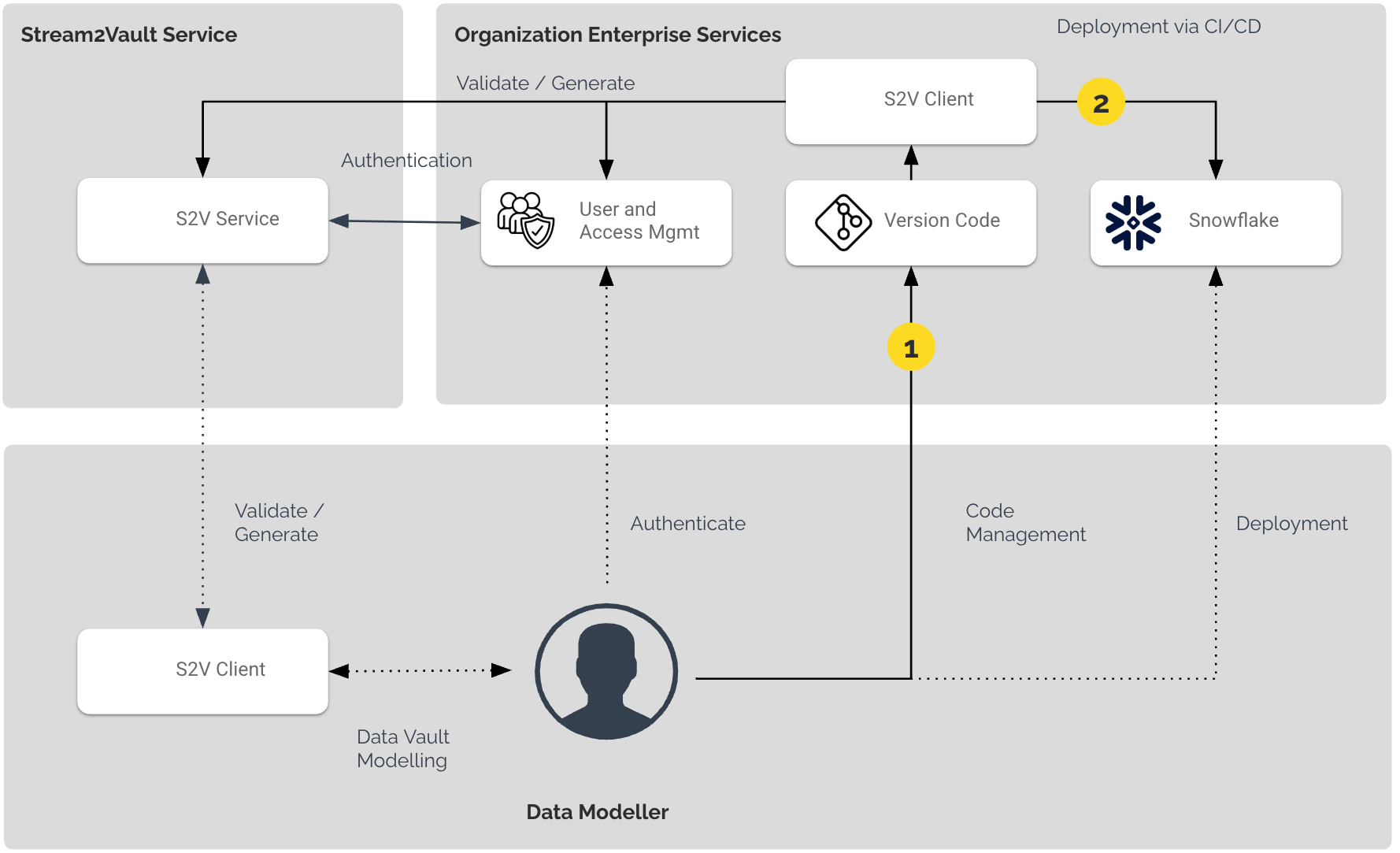

The diagram above illustrates the architecture of the Stream2Vault (S2V) platform, showing how it integrates with enterprise services and interacts with users. The key components and their relationships are described below:

Stream2Vault Service

This is the core backend service of the Stream2Vault platform. It validates user-defined Data Vault models and generates the SQL scripts and deployment artifacts for Data Vault automation.

- It communicates directly with the S2V Client to handle validation and generation requests.

- It integrates with the Authentication Service to validate user credentials, permissions and licenses.

Organizational Enterprise Services

In contrast to traditional code generators, the Stream2Vault Service never has access to data sources or any other organizational services. The idea behind the service is simple: each validation and generation request contains the model and metadata required for technology-specific code generation.
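To make the idea of a self-contained request concrete, here is a minimal sketch of what such a request could carry. The class name `GenerationRequest` and its fields are hypothetical and chosen only for illustration; the actual payload format of the S2V Service is not described in this section. The point is that the request holds model text and settings, and nothing that would give the service access to a data source.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class GenerationRequest:
    """Hypothetical request shape (assumption, not the real S2V payload)."""
    target_technology: str                            # e.g. "snowflake"
    settings: dict = field(default_factory=dict)      # parsed settings file content
    model_files: dict = field(default_factory=dict)   # relative file name -> YAML text

    def to_json(self) -> str:
        # Serialize deterministically so the same model yields the same payload.
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only; the keys inside settings and the model are made up.
request = GenerationRequest(
    target_technology="snowflake",
    settings={"example_setting": "value"},
    model_files={"hub_customer.yaml": "name: hub_customer\n"},
)
payload = request.to_json()
```

Nothing in the payload refers to connection strings or credentials for the target database, which matches the separation described above.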

User and Access Management

Handles user authentication for both the S2V Client and the S2V Service. Ensures secure access to enterprise resources, such as Git repositories and databases.

Stream2Vault Client

The S2V Client is the command-line interface used to communicate with the S2V Service.

- It enables the validation and generation of technology-specific code based on the input model configuration.

- It authenticates with User and Access Management to ensure secure operations within the organizational environment.

The client is available as a Python package on PyPI and can be used on any CI/CD agent or on your local laptop / VDI. We recommend using the client as part of your CI/CD pipelines, so that Data Modellers only edit YAML files in their favourite IDE (e.g. VS Code) and version them in a Git repository (e.g. GitHub, GitLab, etc.). The pipeline can then be used to validate the model and to generate and deploy the resulting code.

Version Code

Manages the model versions, enabling version control and collaboration. The model is the only source of truth, and the resulting code can be regenerated from it at any point in time. We therefore recommend not storing the generated SQL files and moving them from environment to environment, but instead regenerating the code from the model for each environment.

Target Technology (e.g. Snowflake)

Receives and stores the deployed Data Vault structures. It is the target system (e.g. Snowflake) for the Data Vault objects validated and generated by the Stream2Vault platform. Deployments are managed through the CI/CD pipeline, so that they are automated and integrate directly into the database environment.

Workflow

The typical workflow involving Stream2Vault includes the following stages:

Authentication

The pipeline / user authenticates via the S2V Client, which in turn interacts with the enterprise Authentication Service. The S2V Service also verifies its connection with the Authentication Service.

Validation

The user defines their Data Vault model using YAML files and provides configuration files (e.g., data_vault_settings.yaml). The S2V Client, run either locally or from a pipeline, passes these inputs to the S2V Service, which validates the model.
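As an illustration of the inputs involved, the sketch below gathers the YAML files of a model directory into a mapping of relative path to file content, which is roughly what has to be handed over for validation. The function name `collect_model_files` and the directory layout are assumptions made for this example; the S2V Client performs its own input handling, and this is not its implementation.

```python
from pathlib import Path


def collect_model_files(model_dir):
    """Return {relative POSIX path: text} for every YAML file under model_dir.

    Hypothetical helper for illustration; it only reads local files.
    """
    root = Path(model_dir)
    files = {}
    for path in sorted(root.rglob("*")):
        # Keep model and settings files, skip everything else (docs, scripts, ...).
        if path.is_file() and path.suffix in {".yaml", ".yml"}:
            files[path.relative_to(root).as_posix()] = path.read_text(encoding="utf-8")
    return files
```

Called on a repository checkout, it would pick up files such as data_vault_settings.yaml alongside the model definitions and ignore non-YAML content.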

Code Management

The model artifacts are typically committed to a Git repository, enabling version control and integration into enterprise CI/CD pipelines.

Generation and Deployment

The organization-specific CI/CD pipeline:

- consumes the model artifacts from Git

- connects to the S2V Service via the S2V Client

- validates the model files

- generates deployable SQL scripts and standard deployment artifacts (Makefiles) if the model definition is valid

- runs the overarching Makefile, which resolves the dependencies and deploys the Data Vault structures to the target database service, so that deployment is fully automated.
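The pipeline stages above run strictly in order, and a failed validation must prevent generation and deployment. A minimal sketch of that control flow is shown below. The helper `run_steps` is hypothetical, and the commands are placeholders: the actual S2V Client invocations are not specified in this section, so each step simply runs a trivial Python command in their place.

```python
import subprocess
import sys


def run_steps(steps):
    """Run (name, command) steps in order and stop at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, command in steps:
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"step '{name}' failed with exit code {result.returncode}",
                  file=sys.stderr)
            break
        completed.append(name)
    return completed


# Placeholder command (assumption): stands in for the real client and make calls.
PLACEHOLDER = [sys.executable, "-c", "pass"]

steps = [
    ("validate", PLACEHOLDER),  # would call the S2V Client to validate the model
    ("generate", PLACEHOLDER),  # would call the S2V Client to generate SQL and Makefiles
    ("deploy", PLACEHOLDER),    # would run make on the generated top-level Makefile
]
```

In most CI/CD systems the same ordering is expressed as dependent pipeline stages rather than a script; the sketch only shows the intended stop-on-failure behaviour.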

Summary

This architecture ensures seamless collaboration between the Stream2Vault platform and organizational enterprise services. It integrates secure authentication, robust code management, and automated deployments, providing a streamlined and model-driven experience for data modelers and technical teams.